Written in collaboration with Nick Gibson, CRO Director at Linear.

Are you trying to optimize a landing page? I bet you’re pretty impatient to start testing ASAP.

I’m going to stop you right there, though.

You’ll realize pretty soon that the testing possibilities are endless.

If you’re drowning in a list of test ideas, you’ll end up wasting resources on the wrong tests or comparing options arbitrarily.

Instead, focus on prioritization.

How? We use the ICE Method.

Below, we cover how to use this framework for landing page optimization.

More specifically, we’ll show how you can use ICE scoring to reveal which tests are worth investing in (i.e., the ones that create the most return for your business).

Let’s go!

Navigation:

- How to Calculate ICE Priority Scores

- Pros and Cons of the ICE Framework

- Tips and Tricks: ICE Scoring for Landing Page Optimization

- Should I Use the ICE Scoring Method?

What is the ICE Method?

The ICE Scoring Method is a simple framework used to rank items in a project backlog (a list of features, tests, optimizations, or other work items included in a project).

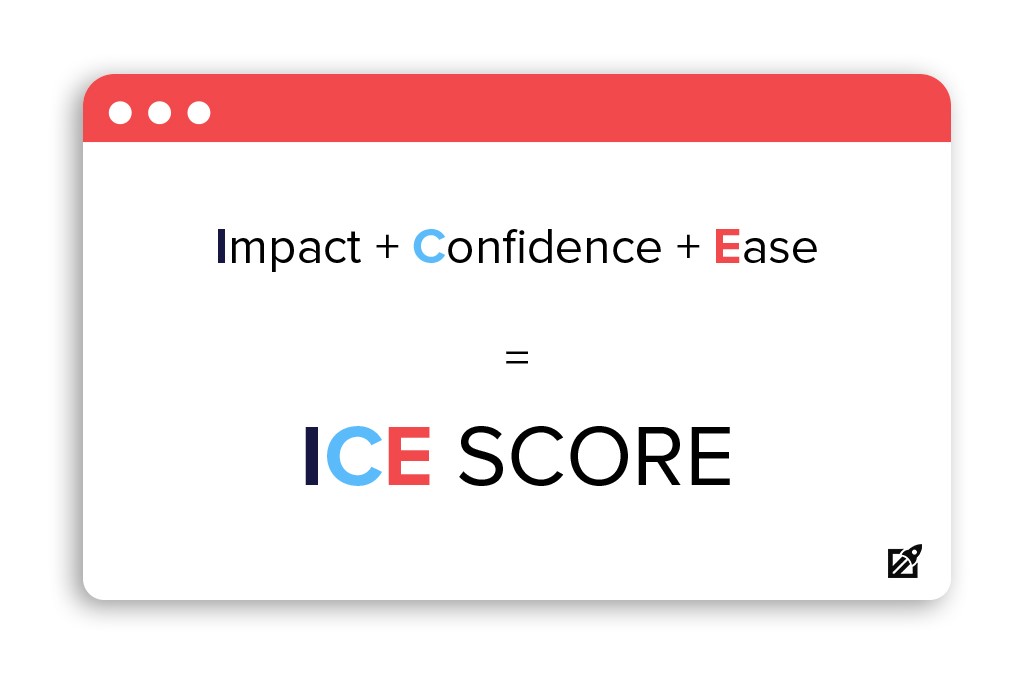

ICE stands for Impact, Confidence, and Ease: the three criteria used to calculate an item’s score. Items with higher scores rank at the top of the backlog.

We use the ICE method because it helps us ask the most important questions. In particular:

- Impact: How much will this help our overall conversion goal?

- Confidence: Based on the data I’ve collected, will this test succeed?

- Ease: How easy (and inexpensive) is this test to implement?

While not perfect (more on ICE’s pros and cons later), prioritization frameworks like the ICE method can help teams make more intentional decisions, avoid brainstorming traps, and make incremental improvements grounded in meaningful business goals.

The Goal of ICE Scoring

Generally, prioritization frameworks give you a consistent, systematic way to organize ideas by business or conversion value.

In particular, the goal of ICE scoring for landing pages is to move you through the prioritization stage quickly and build momentum for testing and experimentation.

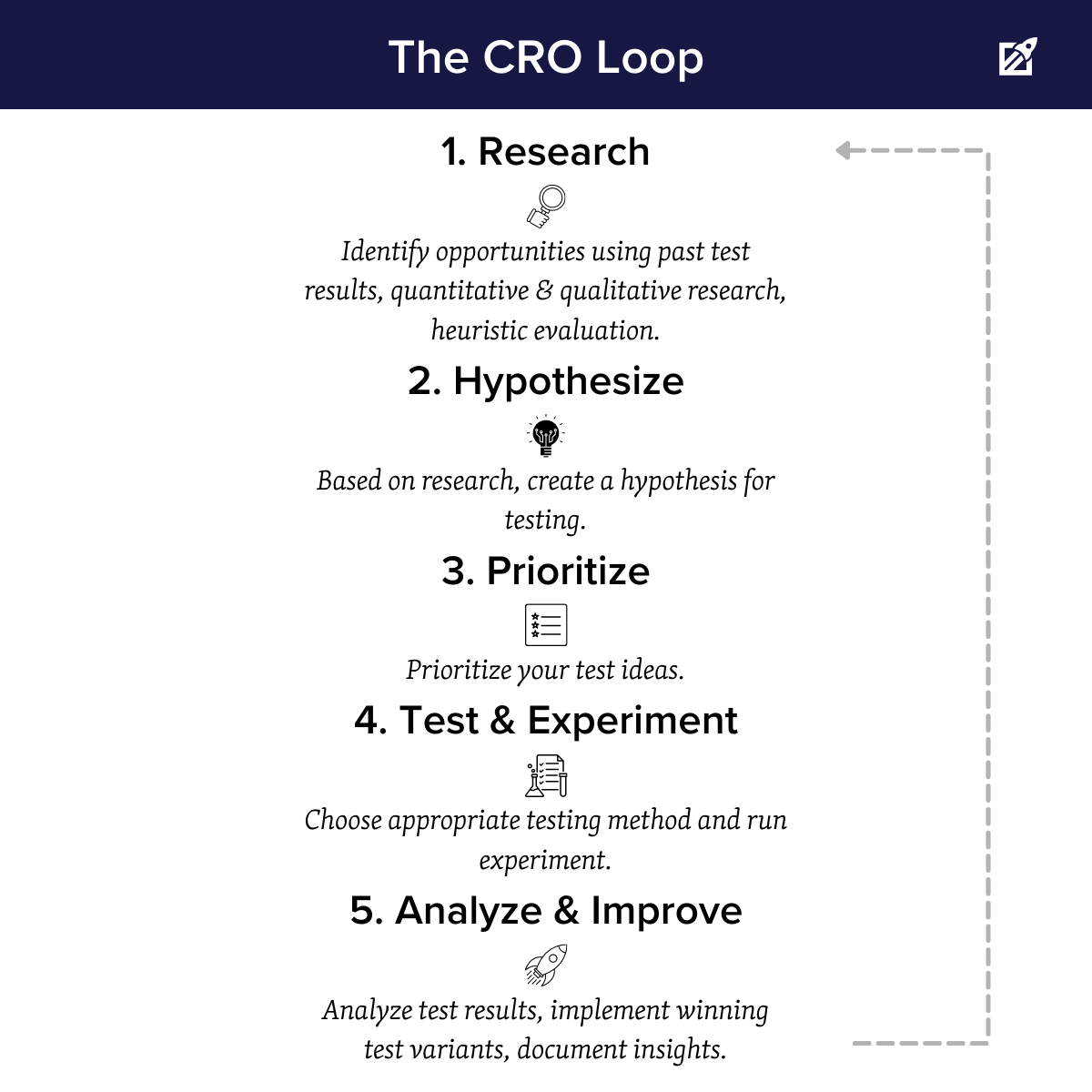

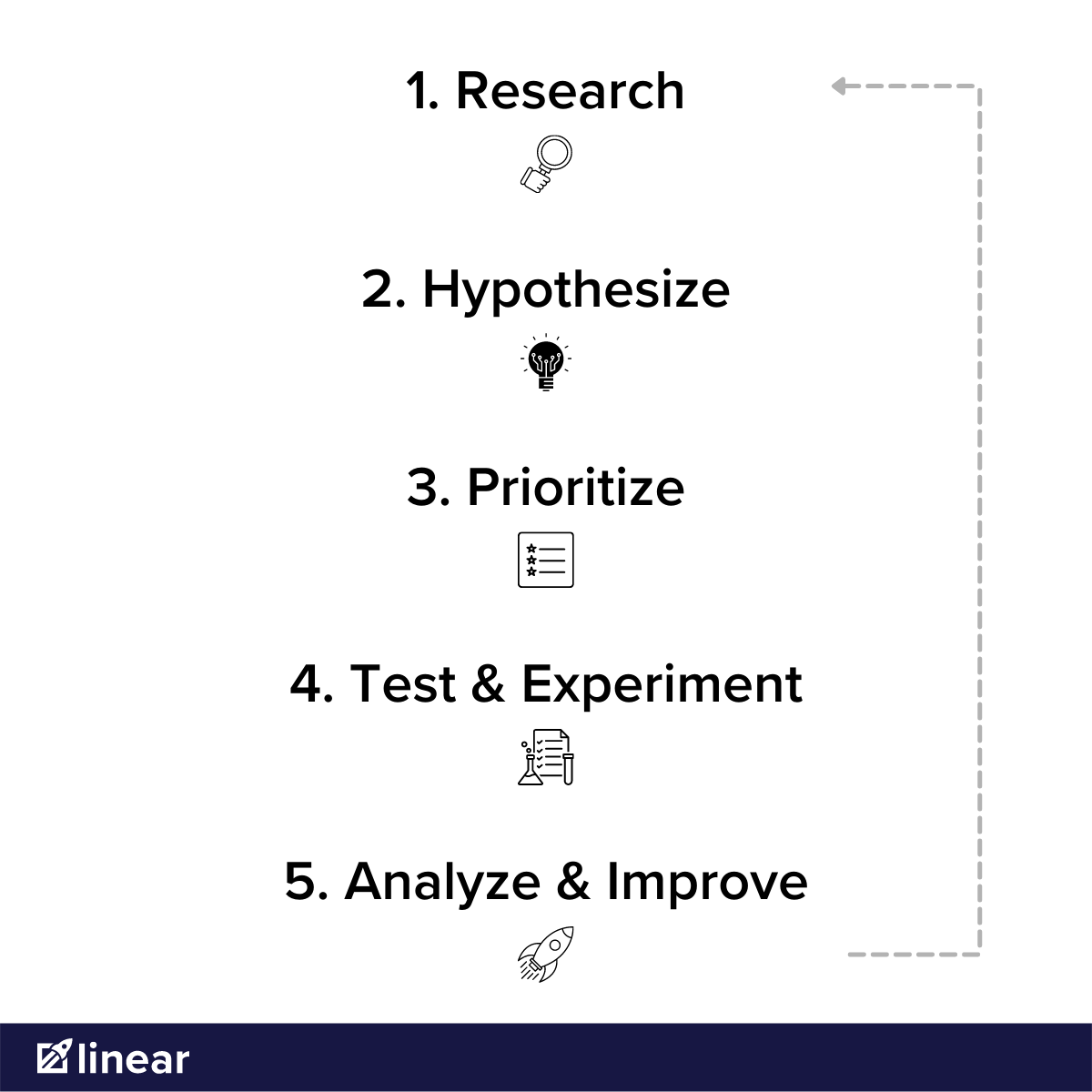

The ICE method can help you get through each stage of the CRO loop efficiently.

When you audit your site, a landing page, or another page element, you’ll end up with a lot of data. Much of this data will include potential improvements and test ideas.

ICE takes the guesswork out of deciding which tests to run first.

When your scores are backed by research and a solid hypothesis, you can move to experimentation faster and build momentum toward a conversion goal.

Examples of ICE Scoring

Now, a little context.

How is the ICE method used in the wild?

- Growth Hacking – using the ICE framework for testing (or rather, growth hacking) was popularized by Sean Ellis of GrowthHackers and is probably the most common way marketers use ICE. With ICE, a team repping multiple departments (e.g., marketing, sales, product, and engineering) can quickly nominate and prioritize a list of 3-5 growth-focused ideas to test each week.

- Feature Prioritization – project managers can also use the ICE methodology to ensure items in a product backlog are worth a developer’s time. Features or steps that rank lower can be pushed to a later stage of a product roadmap or eliminated.

- (Linear) Landing Page Optimization – at Linear, we use ICE scoring to organize which optimizations we prioritize for our clients. When our research and hypotheses generate a new list of improvements, ICE quickly bubbles the best ideas to the top of the list.

How to Use The ICE Method for Landing Page Optimization

You’ve got a list of test ideas; now how do you calculate ICE scores for them?

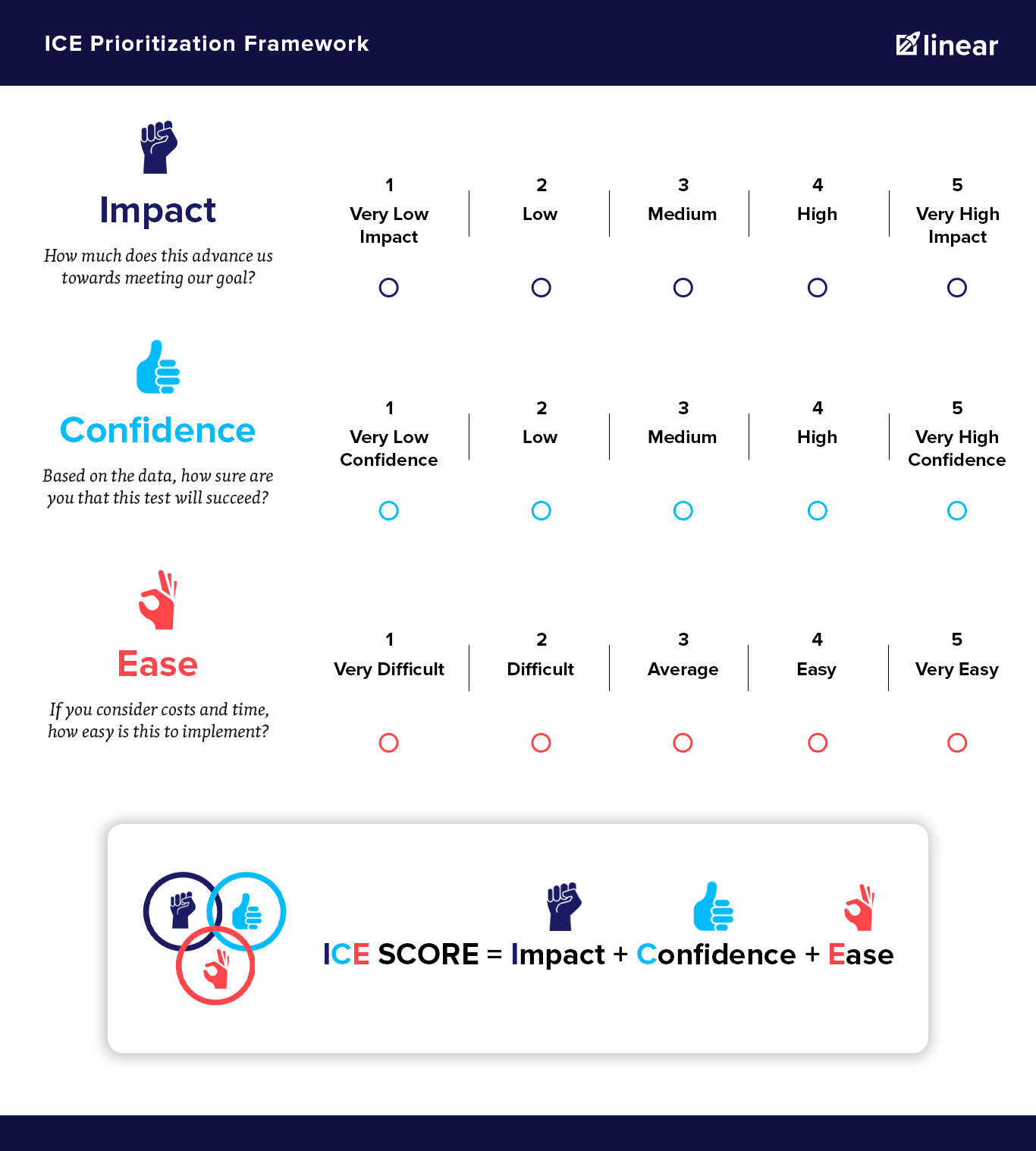

Start by assigning a score between 1 and 5 to each ICE element: impact, confidence, and ease.

A 1 means the idea has low impact, low confidence, or high difficulty.

Assign a 5 for high impact, high confidence, or high ease. The sum of those three criteria, the total “Priority Score,” ranges from 3 to 15 points.

For each test, determine your impact, confidence, and ease levels. Add them together to calculate the test’s ICE score (“Priority Score”).

Once you’ve calculated the priority score for each test, you can quickly sort your ideas.

The highest scored tests on the highest value pages represent the most potential value for your business.
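The scoring arithmetic is simple enough for a spreadsheet, but a short Python sketch makes each step explicit: validate the 1-5 ratings, sum them into a Priority Score, then sort. It assumes page traffic as a stand-in for page value (the ICE sheet described later records page traffic); the `TestIdea` class, its fields, and the `prioritize` helper are hypothetical names for illustration, not part of Linear’s template.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    page: str
    page_traffic: int  # assumed proxy for page value (e.g., monthly visits)
    description: str
    impact: int  # 1 = low impact, 5 = very impactful
    confidence: int  # 1 = low confidence, 5 = high confidence
    ease: int  # 1 = very difficult, 5 = very easy

    def __post_init__(self) -> None:
        # Each ICE criterion must be scored on the 1-5 scale
        for name in ("impact", "confidence", "ease"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def priority(self) -> int:
        # Priority Score = Impact + Confidence + Ease, so it always falls between 3 and 15
        return self.impact + self.confidence + self.ease


def prioritize(backlog: list[TestIdea]) -> list[TestIdea]:
    # Highest-traffic pages first; within a page, highest Priority Score first
    return sorted(backlog, key=lambda idea: (-idea.page_traffic, -idea.priority))


if __name__ == "__main__":
    backlog = [
        TestIdea("Pricing", 12_000, "Rework plan comparison table", 4, 3, 2),
        TestIdea("Pricing", 12_000, "Shorten signup form", 4, 4, 4),
        TestIdea("Webinar", 3_000, "New hero headline", 3, 4, 5),
    ]
    for idea in prioritize(backlog):
        print(idea.priority, idea.page, idea.description)
```

With these made-up ideas, the pricing page’s 12-point test comes first, its 9-point test second, and the webinar page’s 12-point test last, because page value is ranked ahead of score. If you’d rather rank purely by Priority Score, sort on `-idea.priority` alone.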

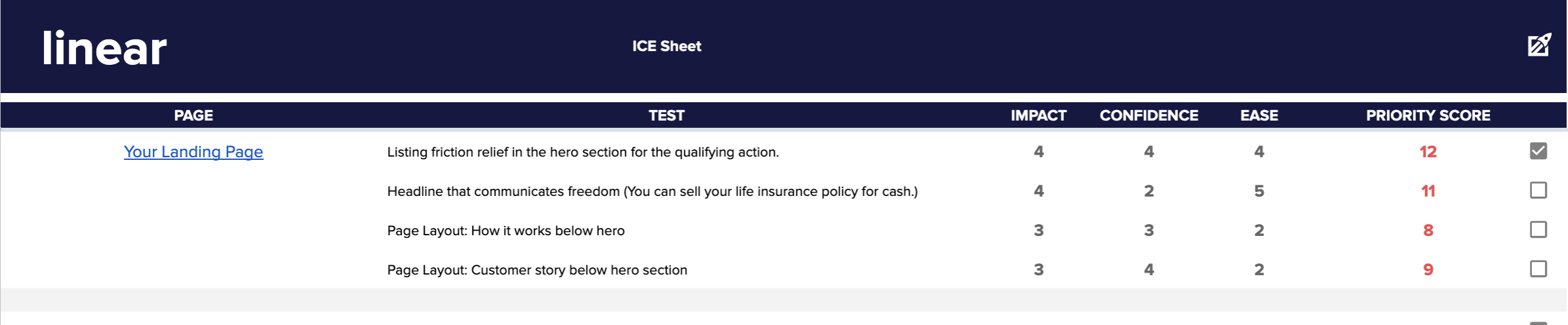

Example scoring on ICE sheet

In this example, each test is given its own line. Impact, Ease, and Confidence were rated and combined into the ICE score in the right column.

But what do the Impact, Confidence, and Ease ratings mean? And how do you decide what score to give them?

Next, we dive into the three essential questions to ask before assigning Impact, Confidence, and Ease scores.

1. Impact

First up is impact, or in other words, how much “reward” is left over after spending the time and money to complete a test?

The Question to Ask: How far does this move us toward our overall conversion goal?

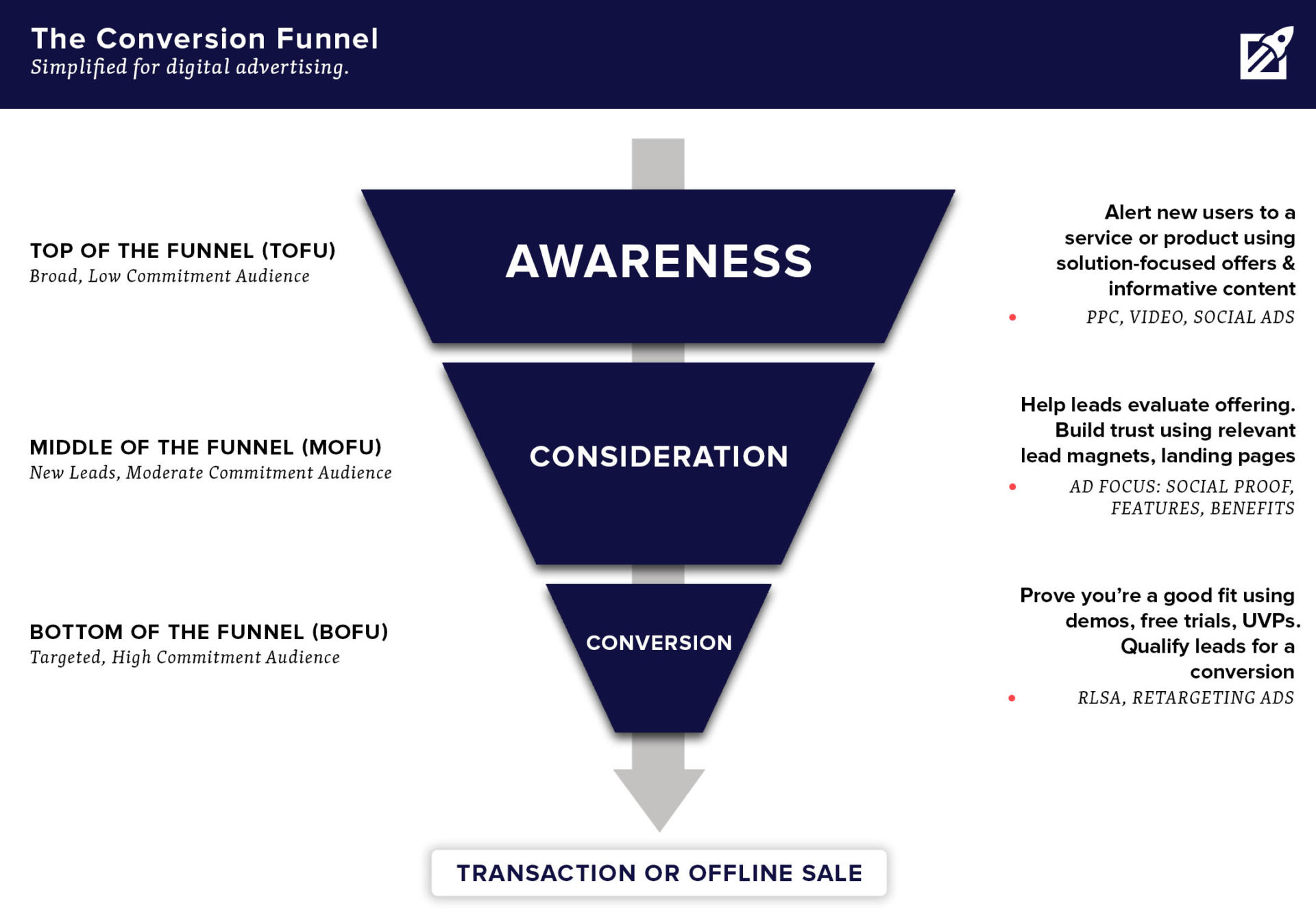

At a high level, impact estimates can reflect how effectively an experiment moves users through the marketing conversion funnel.

You can use ads, landing pages, and thank you pages strategically to move users through each funnel stage.

For landing page optimization, we look a little closer.

Each proposed landing page change has a driver (the element we expect to produce growth) and a metric we think that driver will impact.

For example, a new headline or form might drive a lift in conversion rate. Or a new landing page section might increase scroll depth.

A high-impact experiment is one we expect to drive the most significant lift in that goal metric.

2. Confidence

The next ICE criterion, confidence, is a measure of how much support backs your hypothesis.

The Question to Ask: Based on the data, how sure am I that this test will succeed?

Assigning a high confidence score means that you have a lot of proof to support your hypothesized test outcome.

You can use multiple data sources to support a hypothesis. More rigorous data types and a longer testing history warrant a higher confidence score.

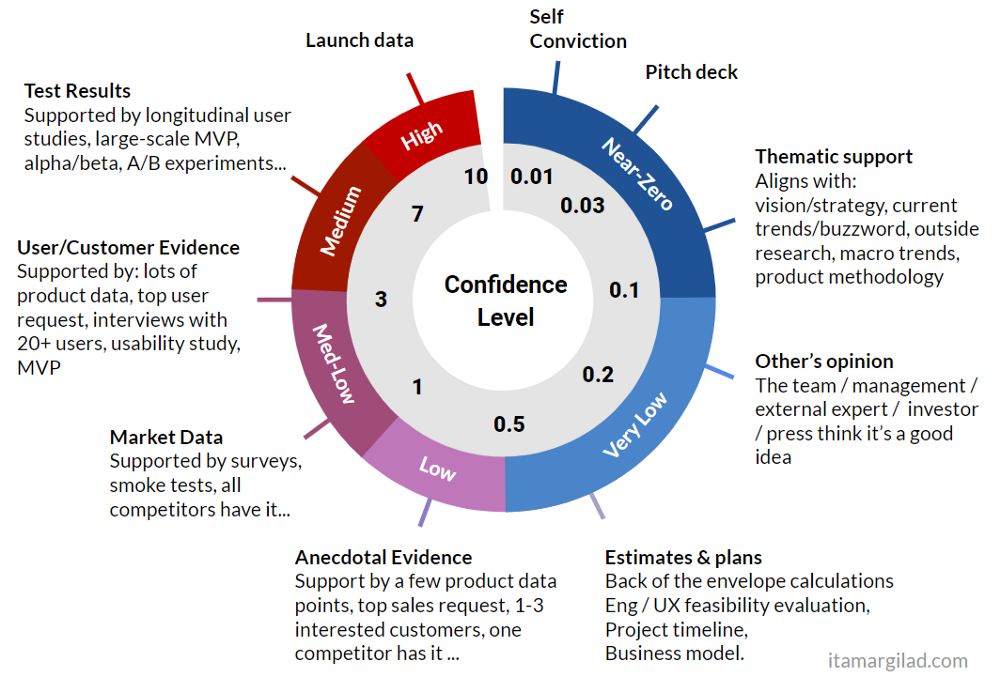

Take, for example, this illustration of a confidence meter.

Notice how formal test results justify higher scores than “hunches” or anecdotal evidence.

The example above is specific to product design, but you can use the principle to design your own “confidence meter.”

Testing landing pages? Go back to the first two steps in the CRO loop: research and hypothesis.

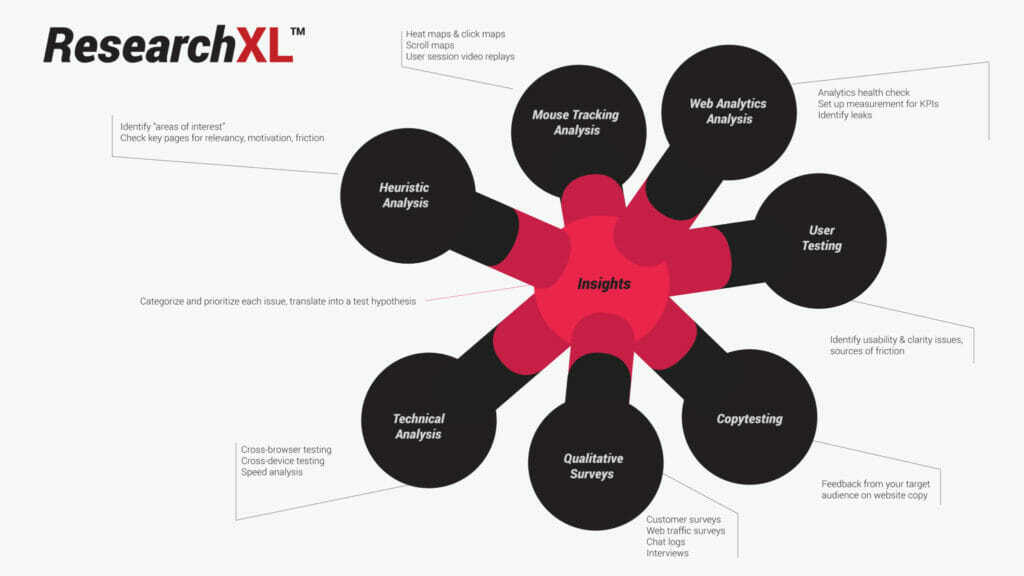

You can then pull data from sources like these to evaluate how much support your testing hypothesis has:

- Previous test results and recommendations for that page

- Test results from similar landing page elements or pages

- CRO audits

- Heuristic analyses

- Web analytics data

- Surveys and voice-of-customer data

- Technical audits
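One way to turn these sources into your own “confidence meter” is to give each evidence type a fixed score and take the strongest evidence available. The Python sketch below does that; the weights, the evidence labels, and the rule that three or more distinct sources add one point are all hypothetical assumptions, loosely following the principle that formal test results outrank hunches.

```python
# Hypothetical confidence meter: each evidence type gets an assumed 1-5 score.
# Tune these weights to your own data; stronger, more formal evidence scores higher.
EVIDENCE_SCORES = {
    "hunch": 1,
    "heuristic analysis": 2,
    "technical audit": 2,
    "cro audit": 3,
    "web analytics": 3,
    "survey / voice of customer": 3,
    "test on similar page or element": 4,
    "previous test on this page": 5,
}


def confidence_score(evidence: list[str]) -> int:
    """Return a 1-5 confidence score from the evidence behind a hypothesis."""
    sources = {item.strip().lower() for item in evidence}
    if not sources:
        return 1  # no supporting data: treat the idea as a hunch
    # Raises KeyError for evidence types the meter doesn't define yet
    best = max(EVIDENCE_SCORES[source] for source in sources)
    # Assumed rule: three or more distinct sources earn one extra point
    if len(sources) >= 3:
        best += 1
    return min(best, 5)  # never exceed the top of the scale
```

Raising an error on unknown evidence types is deliberate: it forces the team to agree on a score for a new source before anyone uses it.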

Before we move on to ease, here’s a pro-tip from CRO expert Nick Gibson:

“A confidence rating of 5 does not necessarily align with ‘100% certainty.’ That would be unrealistic; sometimes, the results just don’t support your hypothesis.

A high confidence score simply means that substantial data supports the hypothesis in similar cases and may resonate with the case in question.”

The takeaway? Don’t worry if a 5/5 confidence test still fails; you can still pull valuable insights from it.

Just take a deep breath and loop back around to the next testing cycle.

3. Ease

Ease is simply an estimate of the resources required to perform a test. The fewer resources you need to complete an experiment, the higher the ease score.

The Question to Ask: If you consider costs like time, money, and other resources, how easy is this test to implement?

Factoring in the actual costs of testing is essential. High costs reduce the ROI of even high-impact changes.

Ease is probably the easiest criterion to quantify because it considers measurable elements like:

- Duration: How long will this take to implement?

- Expense: Will this test require any additional budget or resources?

- Contract Work: Will this test depend on a developer or external team member to implement?

- Team involvement: How many team members will have to work on or manage this experiment? (measured in % of a department or collective hours)

- Effort: How much of this experiment is automated vs. manual? (Relevant if a test requires manual analysis or is moderated in person.)

We suggest starting as simply as possible (e.g., with time estimates or budget spend).
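If you start with time estimates, a simple lookup can turn hours into an ease score. The thresholds in this Python sketch are hypothetical placeholders; calibrate them against how long your own past tests took to implement.

```python
# Hypothetical thresholds: (maximum estimated hours, ease score).
# Calibrate them against how long your own past tests took to implement.
EASE_THRESHOLDS = [(2, 5), (8, 4), (24, 3), (80, 2)]


def ease_score(estimated_hours: float) -> int:
    """Map an implementation-time estimate to a 1-5 ease score (5 = easiest)."""
    if estimated_hours < 0:
        raise ValueError("estimated_hours cannot be negative")
    for max_hours, score in EASE_THRESHOLDS:
        if estimated_hours <= max_hours:
            return score
    return 1  # more than 80 hours, roughly two 40-hour work weeks
```

Keeping the thresholds in one list makes it easy to add budget or team-involvement factors later without changing how the score is looked up.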

ICE Scoring Template (Steal it!)

Now you have a better understanding of how the ICE methodology works and how to score.

The next step is to calculate a few ICE scores yourself.

To make it easier for you, we’re sharing the same ICE template we use for our clients:

The template includes all the sections shown in the example above, plus an easy prioritization formula to automatically calculate each test’s priority score.

So grab your ICE Sheet and start scoring!

Next up: the pros and cons to consider before adopting ICE. Then, the tips you need to use the ICE method for landing page optimization.

Pros & Cons of the ICE Framework

Let’s weigh the pros and cons of using ICE scores to prioritize landing page tests.

Advantages of ICE Methodology

The immediate advantage of ICE scoring is that it’s quick and easy. It also helps ensure that you don’t waste time choosing your next experiment.

During the research and hypothesis stages of the CRO loop, a lot of ideas for testing will rise to the surface.

It’s easy to get stuck ideating, which often kills momentum.

You’ll end up stuck in ideation instead of moving confidently through to the testing and experimentation phase.

Now, that isn’t to say the ICE Method’s simplicity is an advantage when applied to all project types.

But landing page optimization is an iterative process. Even though experiments are driven by hypotheses and past data, they don’t land on “perfect” results. Even a successful test is just an improvement.

Failed experiments can still inspire valuable insights about your audience or landing page.

ICE scoring is well suited to landing page optimization projects because it prioritizes momentum over exactness.

It’ll help you prioritize tests in the right ‘ballpark’ of your goal while minimizing costs and time spent agonizing over your backlog.

After all, your job isn’t to prioritize prioritization. It’s to test, analyze, and grow!

Drawbacks of using ICE Scoring

The main critique of the ICE method, and a genuine concern, is that the scoring process is subjective.

Without strict, quantitative guidelines (for example, around what warrants an impact score of 1 vs. 5), teams could run into consistency issues, such as:

- Two team members may assign scores differently based on experience levels or other random factors

- One scorer could assign different scores to the same test (or to similar tests) on different days

- Potential for bias and score manipulation to push a particular experiment through

We cover a few ways to maximize scoring consistency in the next section.

The bigger question is, to what degree could ICE inconsistencies hurt your landing page optimization process?

We say not much, as long as you’re applying ICE in a CRO context (more on this later).

Honestly, decreasing your testing volume because you’re obsessing over prioritization hurts your efforts more than small variations in ICE scores.

Do your best to minimize wild inconsistencies and move on!

5 Tips on Applying The ICE Method to Landing Page Optimization

1. Focus on one goal

Identify the goal or heuristic that’s most important to each landing page.

In other words, don’t try to drive change across too many metrics at once.

Once you’re clear on each page’s objective, you can more easily determine which tests to focus on and the impact they’ll have on metrics like:

- Conversion rate

- Bounce rate

- Qualified leads

- Scroll depth

Final thought: don’t forget to bring in the people who matter! Align with your marketing agency, team, and stakeholders involved in the growth project. Get clear on the metrics that matter to them and the business.

2. Create a hypothesis-centered testing backlog

What’s the easiest way to produce more winning tests? Build a better-quality testing backlog.

To do that, you have to start with good data sources.

In other words, your tests won’t yield valuable insights if you use lousy research for your hypotheses.

The ResearchXL model created by Peep Laja of CXL provides a great foundation for data collection.

Once you know how to conduct research effectively, you can use it to fuel really solid hypotheses.

CRO Director Nick Gibson says this about the power of good data and hypotheses:

“It’s useful to keep in mind that the [CRO] process doesn’t end after one test or round of testing, but should provide valuable insights for as long as you can make room for optimization efforts.

Every test you run should have the purpose of opposing or (ideally) supporting a hypothesis. Use the information you get from the test results to guide the next round of optimizations.”

Nick also shared this real-life example to illustrate his point.

Example: The Power of Hypothesis-Centered Testing

Imagine you’re optimizing a landing page for an offer targeting an older demographic.

User research suggests this demographic responds well to hyper-clear, matter-of-fact messaging. However, the current landing page uses messaging that prioritizes emotional resonance instead of clarity.

Before you test, you create this hypothesis: if we change the messaging in the subheader to state “This is a Free Information Guide. No Commitment required.” we will meet the target demographic’s need for clarity, increasing their trust in the offer.

Broadly, the test can point to one of two outcomes:

- The audience responds more positively to emotional resonance

- The audience responds more positively to clarity.

But what happens when the results come in?

First, record the test’s impact: a 15-20% lift or drop is more significant than a 3-5% lift or drop.

Note this because the greater the impact, the more confidently you can use this data as supporting evidence when scoring future tests.

More importantly, record the outcome of the test.

For example, if you know your audience positively responds to clear messaging, how can you use that data to optimize other elements of the landing page?

A hypothesis for a single page element can open new testing possibilities for the entire landing page.

3. Document ICE Scores and Test Results

It’s easy to get disorganized or accidentally leave team members out of the loop.

A shared testing sheet will keep testing streamlined and help you with future CRO research.

Your ICE sheet should include:

- Your ICE testing backlog

- A test report (a record of each test you run and its results)

First, the ICE sheet:

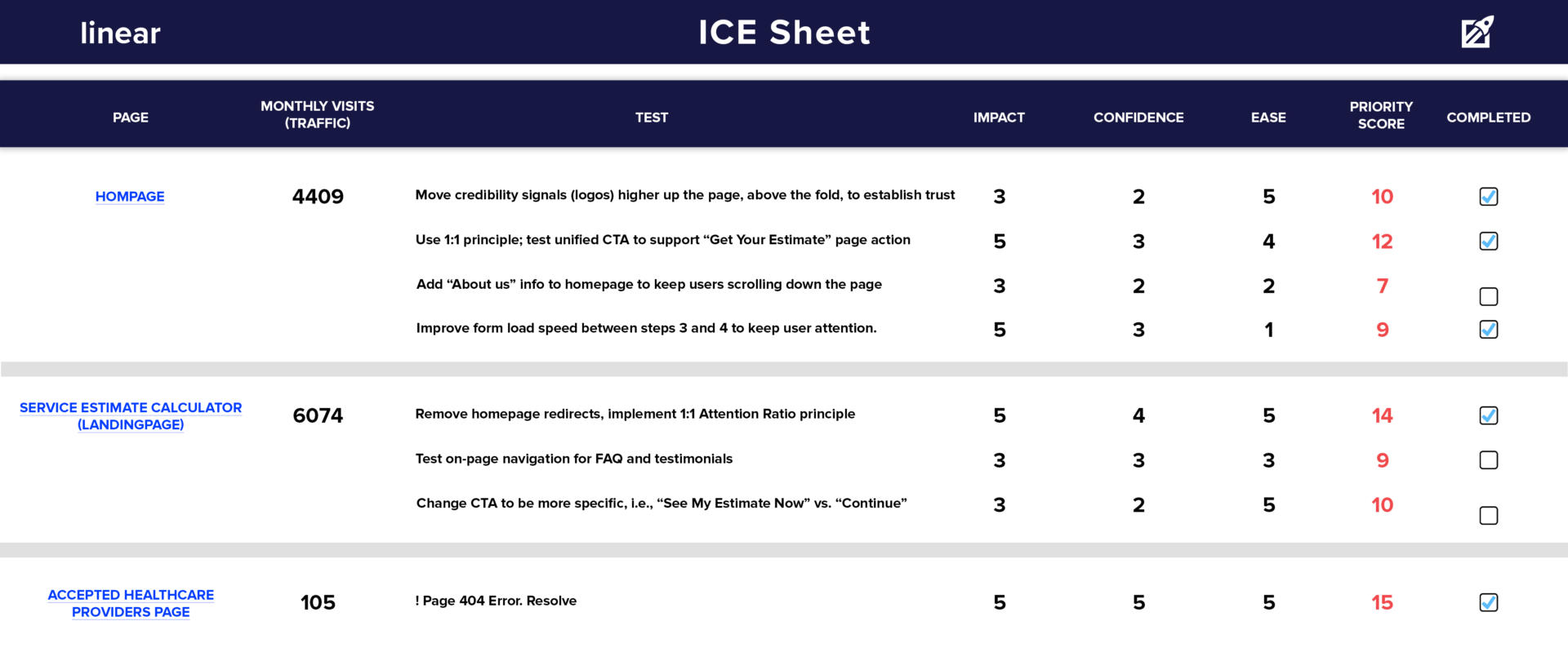

As mentioned earlier, put the most impactful pages at the top of your ICE sheet. Record metrics like page traffic, a test description, and ICE scores in the columns before the final “priority” score.

Pro Tip: to begin with, try to limit yourself to 2-5 test ideas per page.

Next, for each test you run, include a test report. Here’s a basic outline template you can use:

Page: [PAGE NAME]

The page name or title, hyperlinked to the actual page

Date: [DATE RANGE]

A range that includes the start and end dates of your test

TEST NAME:

For example, the second test in the ICE Sheet above, “Removed Redirects 1:1.”

GOAL TYPE:

The focus metric impacted by that specific test/hypothesis. Examples include conversion rate, bounce rate, scroll depth, and qualified leads.

HYPOTHESIS:

A paragraph detailing your prediction, some context on the page element the test is observing, the change applied to the element, and the primary evidence supporting the hypothesis.

[Champion vs. Challenger Section]

For both the challenger (usually the variant) and champion (usually the original) elements, include this information: element title, # of visitors during the testing period, metric volume (e.g., # of conversions), and rate (e.g., conversion rate, bounce rate).

[Results Section]

A section that includes the lift or drop (in %) of the goal metric, the test’s confidence, and whether the hypothesis was supported (did the challenger win?).

TAKEAWAY:

A paragraph summarizing the insights from your test results, with recommendations for further testing.
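The Champion vs. Challenger and Results sections of this template boil down to a little arithmetic. The Python sketch below computes each variant’s rate, the relative lift or drop, and a two-sided p-value from a standard two-proportion z-test. The z-test is one common way to express a test’s statistical confidence, assumed here for illustration; your testing tool may use a different method, and the normal approximation is only reasonable with fairly large samples. The visitor and conversion counts in the example are made up.

```python
import math


def conversion_rate(conversions: int, visitors: int) -> float:
    """Metric volume divided by visitors during the testing period."""
    return conversions / visitors


def relative_lift(champion_rate: float, challenger_rate: float) -> float:
    """Challenger's lift (positive) or drop (negative) versus the champion, in %."""
    return (challenger_rate - champion_rate) / champion_rate * 100


def two_proportion_z_test(
    champion_conv: int, champion_visitors: int,
    challenger_conv: int, challenger_visitors: int,
) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates."""
    p1 = champion_conv / champion_visitors
    p2 = challenger_conv / challenger_visitors
    # Pooled rate under the null hypothesis that both variants convert equally
    pooled = (champion_conv + challenger_conv) / (champion_visitors + challenger_visitors)
    se = math.sqrt(pooled * (1 - pooled) * (1 / champion_visitors + 1 / challenger_visitors))
    z = (p2 - p1) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


if __name__ == "__main__":
    champ = conversion_rate(200, 5_000)  # made-up champion numbers
    chall = conversion_rate(240, 5_000)  # made-up challenger numbers
    z, p = two_proportion_z_test(200, 5_000, 240, 5_000)
    print(f"Champion {champ:.2%}, challenger {chall:.2%}, lift {relative_lift(champ, chall):.1f}%")
    # Assumed 95% threshold for declaring the challenger the winner
    print(f"z = {z:.2f}, p = {p:.3f}, challenger won: {chall > champ and p < 0.05}")
```

With these made-up numbers the challenger shows a 20% lift, yet the p-value lands just above 0.05: a sizable lift on modest traffic can still fall short of a conventional significance threshold, which is worth noting in the TAKEAWAY.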

4. Don’t Obsess Over ICE Score Consistency

We completely understand why ICE scoring inconsistencies may concern you.

Before you let those concerns slow down the prioritization process again, understand:

Some subjectivity in your ICE scores won’t hurt your CRO process.

Why? Because the growth process (CRO loop) is flexible enough for non-perfect scoring.

Consider…

If you’re entirely new to testing, then yes, you won’t have much data to support your hypotheses. You may assign some impact or confidence scores on a hunch.

But as you move through the cycle again and again, your team’s scoring consistency will naturally improve.

After each test, you’ll have actual impact data and evidence to support future research and hypotheses.

Comparing pre- and post-test data helps explain why certain tests fail and naturally sharpens your future confidence scores.

And by recalling past tests, you can estimate potential costs more accurately before assigning ease scores.

In other words, because the CRO process is naturally iterative, you can move past early scoring missteps quickly, as long as you commit to documenting your test reports.

Pro Tip: Does subjectivity still bother you? Scoring standards can help reduce inconsistencies and scale ICE scoring for a larger team. Some recommendations:

- Assign a final ICE score review to an experienced CRO

- Take an aggregate of multiple team members’ scores

- Align on a single goal metric per landing page to narrow down test lists to 2-5 ideas

- Update your documentation process, so results from all tests (and insights) are accessible for all team members.
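Aggregate scoring can be as simple as averaging each criterion across scorers. The Python sketch below also flags criteria where team members disagree widely, so those tests can go to the experienced reviewer for a final call. The function name, the data shape, and the 1.0 standard-deviation cutoff are hypothetical choices for illustration.

```python
from statistics import mean, pstdev

CRITERIA = ("impact", "confidence", "ease")


def aggregate_ice(
    scores_by_member: dict[str, dict[str, int]], max_spread: float = 1.0
) -> tuple[dict[str, float], list[str]]:
    """Average each ICE criterion across scorers and flag large disagreements.

    scores_by_member maps a team member's name to their 1-5 scores, e.g.
    {"Ana": {"impact": 4, "confidence": 5, "ease": 5}, ...}.
    """
    averaged: dict[str, float] = {}
    flagged: list[str] = []
    for criterion in CRITERIA:
        values = [scores[criterion] for scores in scores_by_member.values()]
        averaged[criterion] = mean(values)
        # A wide spread suggests the team reads the evidence differently
        if pstdev(values) > max_spread:
            flagged.append(criterion)
    # Summing the averaged criteria equals averaging each member's total score
    averaged["priority"] = sum(averaged[c] for c in CRITERIA)
    return averaged, flagged
```

Keeping the criteria separate, rather than averaging each member’s total, gives the same Priority Score but makes it easier to see where a disagreement comes from.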

Maximize scoring consistency where you can, and move on!

5. Know when to delegate

The ICE Method is easy enough to use. So is setting up and running tests.

Just remember that testing and prioritization are only two pieces of the CRO picture.

Other things you’ll want to figure out include:

- Choosing the correct tests based on sound hypotheses and research

- Drawing the right conclusions from testing data

- Creating recommendations for future tests based on results

Don’t be afraid to reach out to contractors or agencies who know their stuff to help guide the experimentation process.

Wrap Up on ICE Scoring: Should I Use the ICE Score Method?

So, should you use ICE scoring to prioritize your landing page tests?

We’ll give an enthusiastic ‘yes!’…with a few caveats.

No, ICE scoring isn’t the most rigorous prioritization technique. But it “gets the job done,” efficiently transitioning you from ideation to actual testing.

And you can ease concerns about the ICE method’s subjectivity by using techniques like aggregate scoring to increase consistency across a large team.

Overall, you can’t get the most out of ICE scoring without sound hypotheses and a thorough test reporting process.

So make sure you leave the whole testing process to an experienced team member or your favorite CRO agency. 😉

Ariana Killpack

Director of Content

Ariana prides herself on always learning everything there is to know about pay-per-click advertising and conversion rate optimization, which is why she can create such excellent content. When she’s not writing fantastic content, you can find her hiking, swimming, or baking bread.
